I am a second-year master's student at the Institute of Software, Chinese Academy of Sciences (ISCAS), supervised by Prof. Hui Chen from ISCAS and Ming Lu from Intel Labs China. I received my B.S. in Computer Science from the University of Science and Technology Beijing in 2023, along with the Beijing Distinguished Graduate Award and the Beijing Outstanding Graduation Thesis award. I serve as a reviewer for international conferences including ICLR, ICME and ISMAR. My research focuses on the following areas:

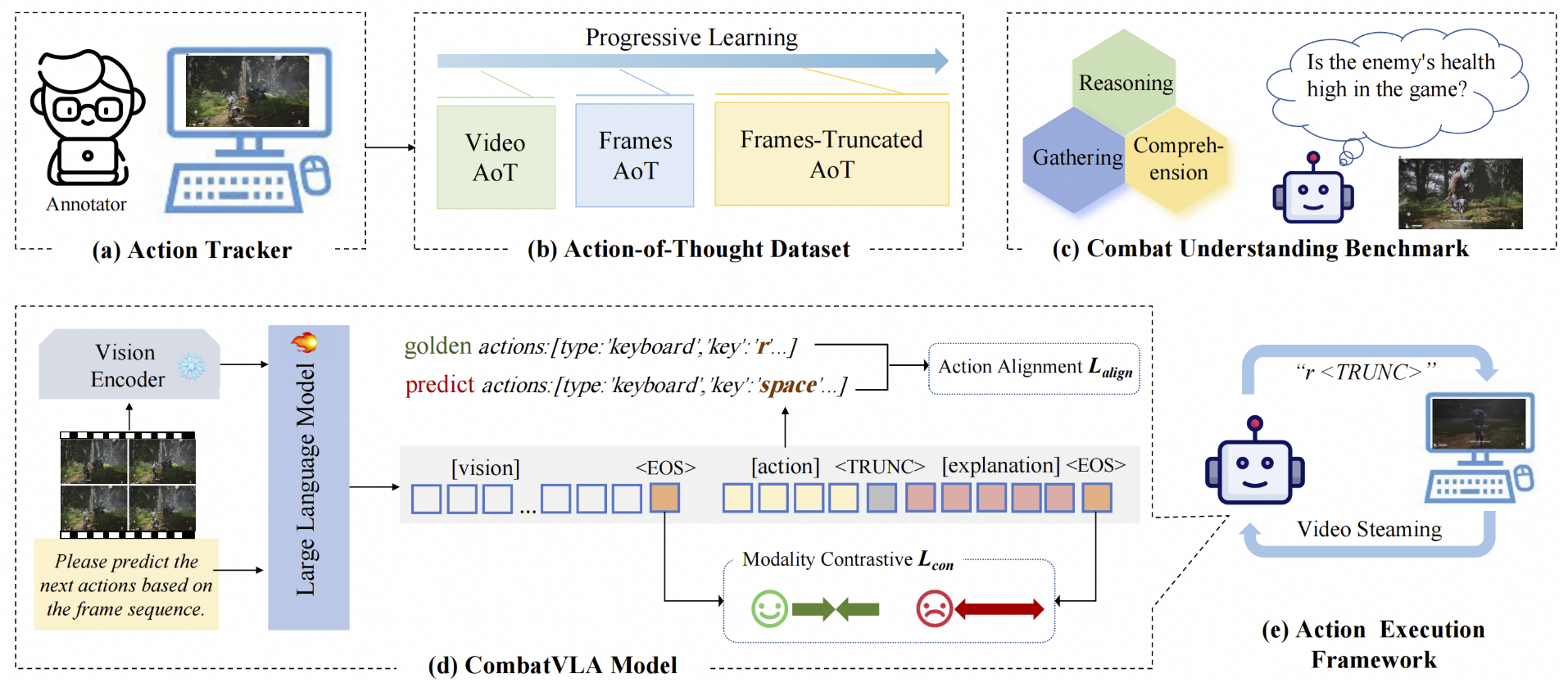

Peng Chen*, Pi Bu*, Yingyao Wang, Xinyi Wang, Ziming Wang, Jie Guo, Yingxiu Zhao, Qi Zhu, Jun Song, Siran Yang, Jiamang Wang, Bo Zheng

Paper / Project / Code

We propose CombatVLA, the first efficient vision-language-action (VLA) model designed for combat tasks in 3D action role-playing games. For efficient decision-making, CombatVLA is a 3B-parameter model that takes visual inputs and outputs a sequence of actions, including keyboard and mouse operations, to control the game.

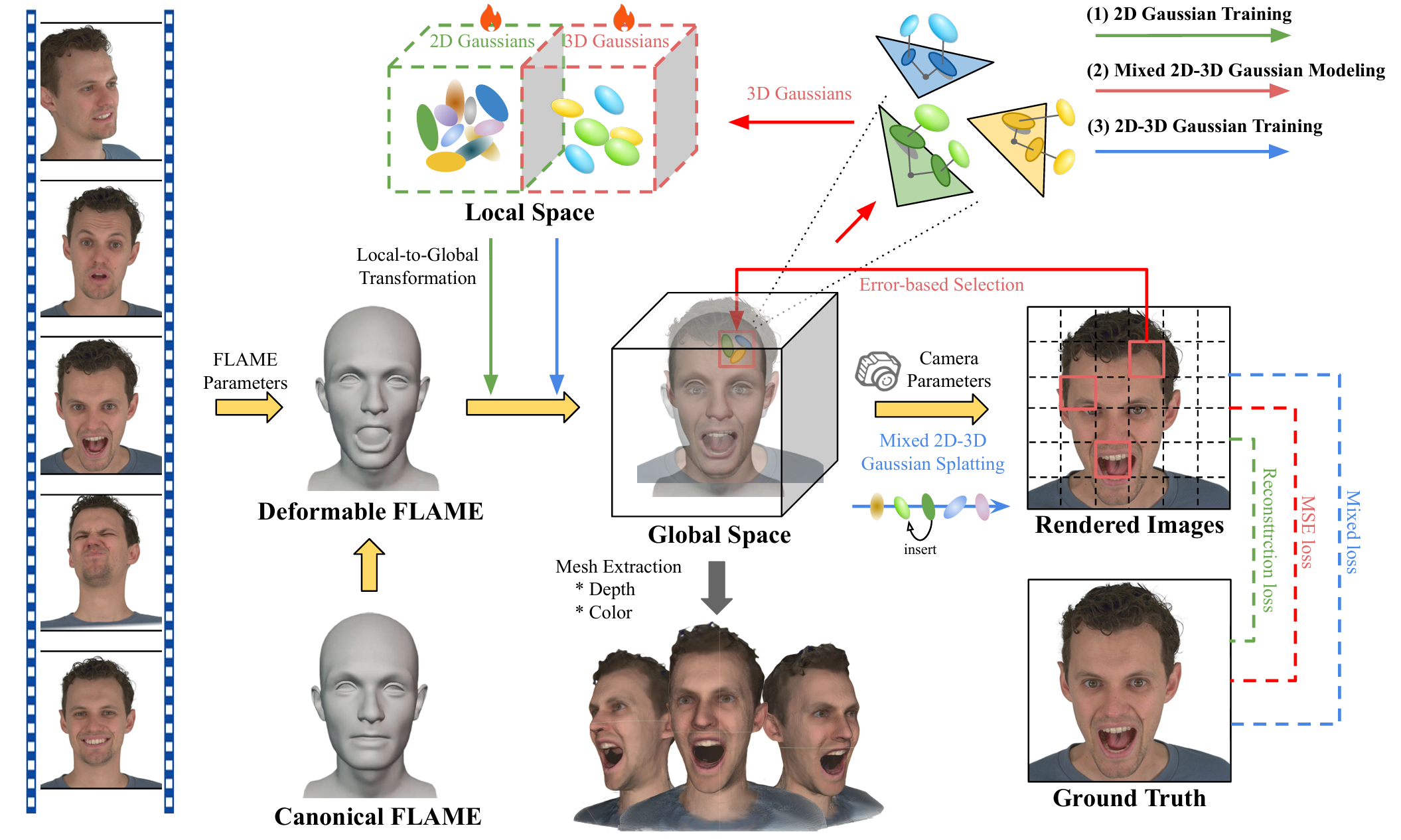

Peng Chen, Xiaobao Wei, Qingpo Wuwu, Xinyi Wang, Xingyu Xiao, Ming Lu

Paper / Project / Code

We use 2D Gaussian Splatting (2DGS) to maintain the surface geometry and employ 3D Gaussian Splatting (3DGS) for color correction in areas where the rendering quality of 2DGS is insufficient, reconstructing a realistic and geometrically accurate 3D head avatar.

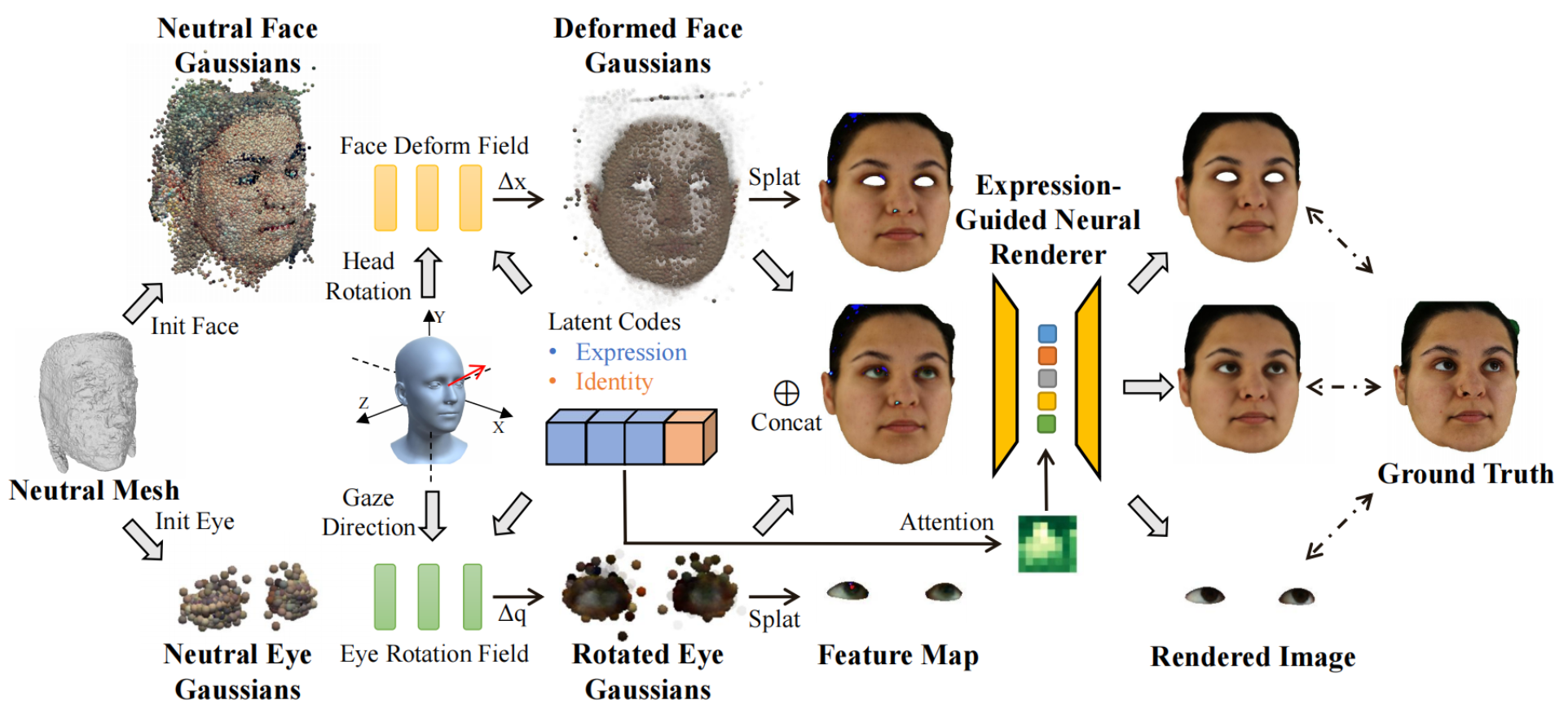

Xiaobao Wei, Peng Chen, Guangyu Li, Ming Lu, Hui Chen, Feng Tian

Paper / Project / Code

We propose GazeGaussian, a high-fidelity gaze redirection method that uses a two-stream 3DGS model to represent the face and eye regions separately.

Xiaobao Wei, Peng Chen, Ming Lu, Hui Chen, Feng Tian

Paper / Project / Code

We propose GraphAvatar, a compact method that uses Graph Neural Networks (GNNs) to generate 3D Gaussians for head avatar animation, offering superior rendering performance with minimal storage requirements.

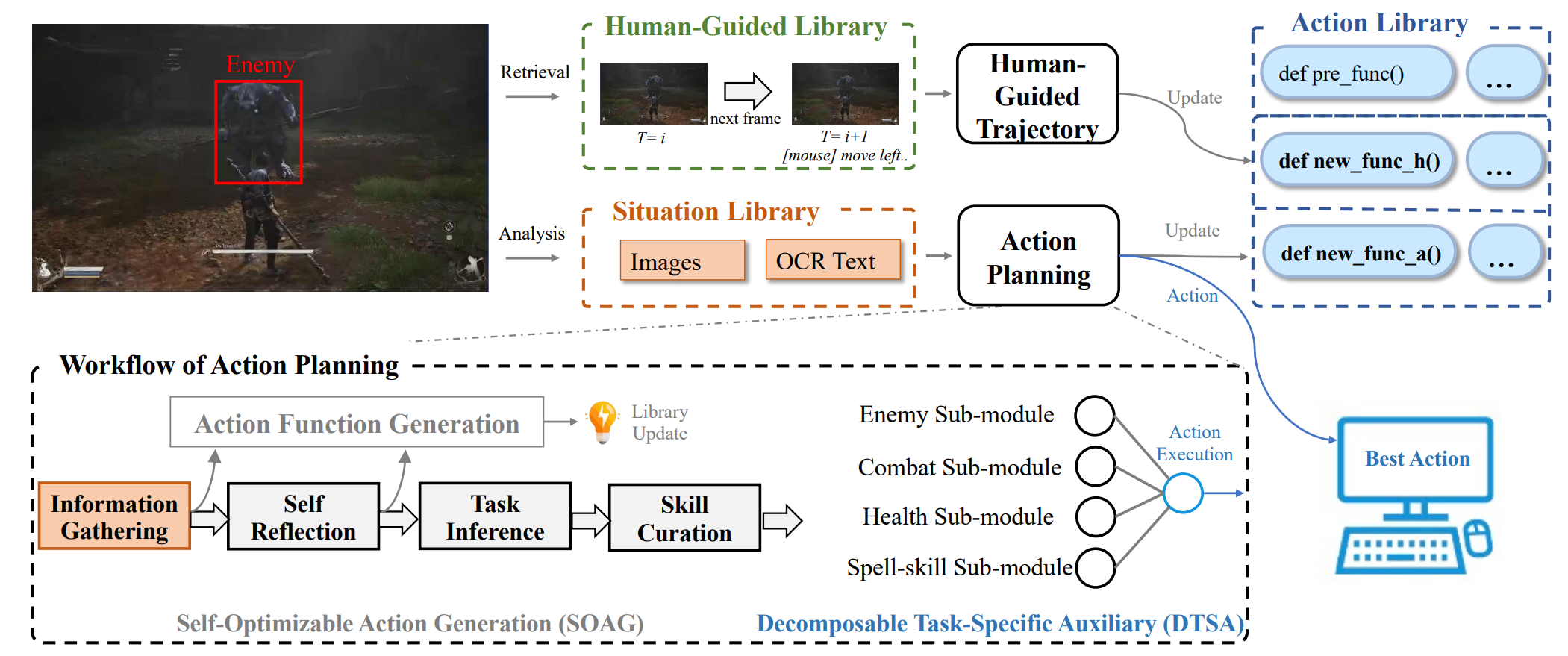

Peng Chen*, Pi Bu*, Jun Song, Yuan Gao, Bo Zheng

Paper / Project

We propose the VARP agent, a novel framework that directly takes game screenshots as input and generates keyboard and mouse operations to play an action role-playing game (ARPG).

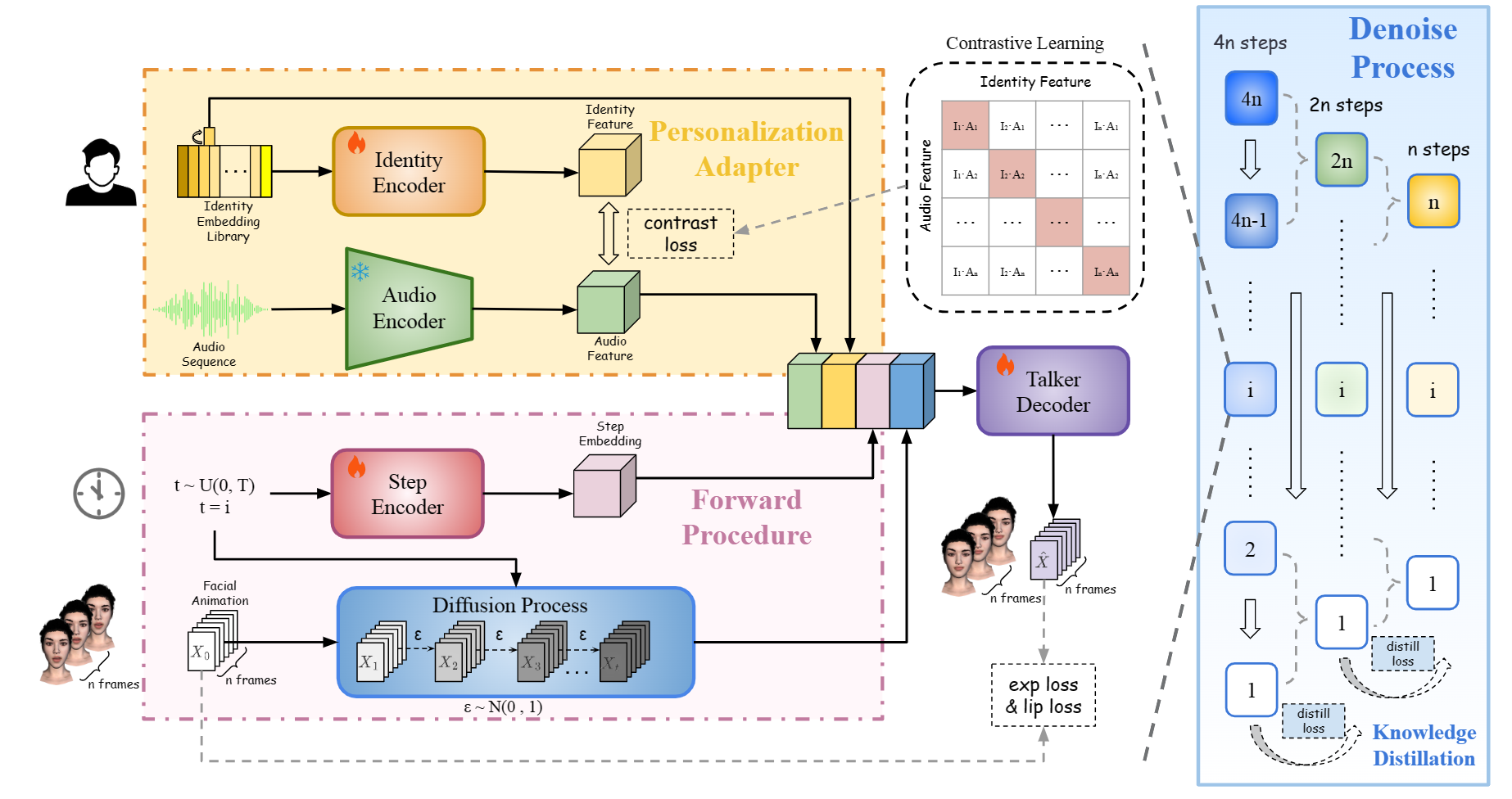

Peng Chen, Xiaobao Wei, Ming Lu, Hui Chen, Feng Tian

Paper / Project / Code

We propose DiffusionTalker, a diffusion-based method that uses a contrastive personalizer to generate personalized 3D facial animation and applies personalizer-guided distillation for acceleration and compression.

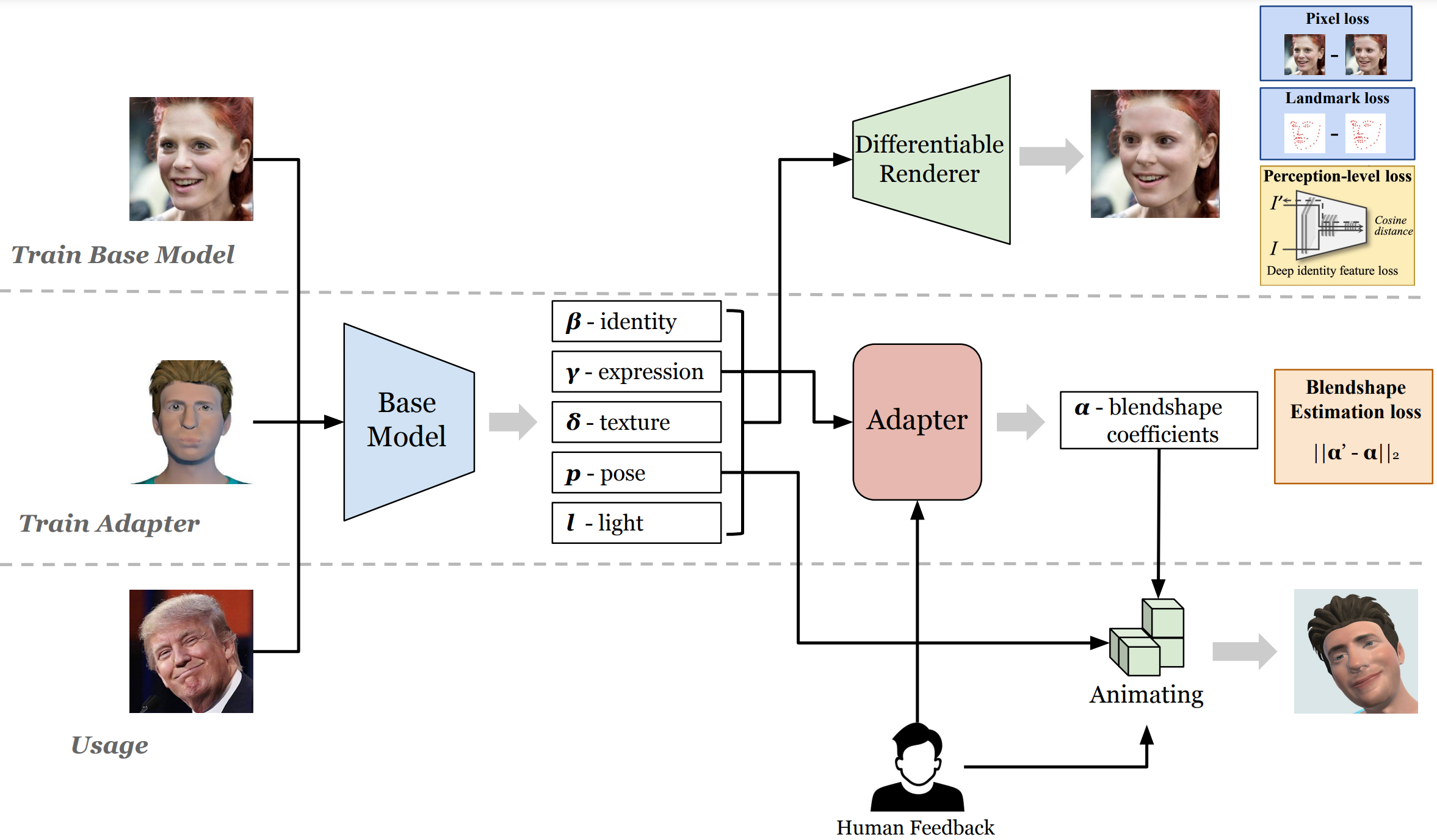

Zechen Bai*, Peng Chen*, Xiaolan Peng, Lu Liu, Naiming Yao, Hui Chen, Feng Tian

Paper / Code

Given a target facial video as a reference, users can bring their own character into our Unity3D-integrated solution, which automatically generates facial animation for the virtual character.

- [04/2024 - 03/2025] Alibaba, Taotian

  Research intern on multimodal large language models (MLLMs), focusing on MLLM-based vision-language-action (VLA) agents, including enhancing the Qwen-VL series and developing advanced applications.

- [11/2023 - 04/2024] AMD, Xilinx AI

  Research intern on diffusion-based AIGC, focusing on improving ControlNet and Stable Diffusion for image generation.

- [07/2023 - 08/2023] Baidu, ACG

  Research intern on LLM evaluation, focusing on the automated evaluation of text-based question-answering tasks for the Wenxin large language model and its reward model.

- [06/2023] Beijing Outstanding Graduation Design (Thesis), 2023.

- [06/2023] Beijing Distinguished Graduate Award, 2023.
